
A simple computer program needs an input, a function, and an output [8]. The programmer knows the input and the output in advance and writes the predetermined set of rules that maps one to the other [9]. Machine learning, in contrast, relies on model training: the more relevant data the system is given, the more reliable and accurate it generally becomes. It is suited to complex functions that are difficult to code by hand. The inputs and outputs are related through statistical data points, and the computer itself constructs the function that links the input and output pairs [10].
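The contrast between a hand-written rule and a learned function can be sketched as follows. This is a minimal illustration only: the temperature conversion, the sample values, and the names `fahrenheit_rule`, `fit_line`, and `learned_rule` are assumptions chosen for brevity and do not come from the cited works.

```python
# Traditional programming: the programmer writes the rule explicitly.
def fahrenheit_rule(celsius):
    return celsius * 9.0 / 5.0 + 32.0


# Machine learning: the rule is estimated from (input, output) pairs.
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training pairs (generated here from the known rule).
celsius = [-10.0, 0.0, 15.0, 25.0, 40.0]
fahrenheit = [fahrenheit_rule(c) for c in celsius]

slope, intercept = fit_line(celsius, fahrenheit)


def learned_rule(c):
    """Function recovered from data rather than written by hand."""
    return slope * c + intercept
```

In the first function the mapping is fixed by the programmer; in the second the same mapping is recovered from examples, which is the sense in which the computer "creates" the function.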

This function is derived by means of intricate computations that only a computer could practically perform [11]. The most fundamental distinction between traditional computing and machine learning is that the former requires the mapping from input to output to be explicitly defined, whereas the latter learns its function from data [12]. There are three primary models used in machine learning:

- Supervised learning

- Unsupervised learning

- Reinforcement learning

- Supervised learning means that the system takes pairs of inputs and outputs and links them through a function; the better the training data represent the functional relationship between input and output, the more accurate the learned function becomes [13]. Because it approximates the function between input and output pairs, a supervised model is often described as a function approximator [14]. Whether the problem is one of regression or classification, the machine learns to predict the appropriate label for each case [15]. Supervised learning depends on model training with labeled datasets, which are split into three subsets: training, validation, and test. Decision trees, random forests, and support vector regression are examples of classic machine-learning algorithms used for supervised learning [16,17].
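The three-way split and a very simple supervised model can be sketched as follows. This is a hedged illustration under stated assumptions: the 70/15/15 proportions, the synthetic one-feature data set, and the helper names `split_dataset`, `fit_stump`, and `accuracy` are hypothetical, and the decision stump (a decision tree of depth one) merely stands in for the tree-based algorithms named above.

```python
import random


def split_dataset(samples, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle labeled samples and split them into train/validation/test."""
    rng = random.Random(seed)
    shuffled = samples[:]
    rng.shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


def fit_stump(train):
    """Choose the threshold on x with the fewest training errors.

    The stump predicts label 1 when x >= threshold, otherwise label 0.
    """
    best_threshold, best_errors = None, None
    for threshold, _ in train:
        errors = sum((x >= threshold) != bool(y) for x, y in train)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def accuracy(threshold, data):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum((x >= threshold) == bool(y) for x, y in data)
    return correct / len(data)


# Synthetic labeled data (assumption): label is 1 when the feature is >= 50.
samples = [(float(x), int(x >= 50)) for x in range(100)]

train, val, test = split_dataset(samples)
threshold = fit_stump(train)      # fit on the training subset only
val_acc = accuracy(threshold, val)    # used for model selection and tuning
test_acc = accuracy(threshold, test)  # reported once, on unseen data
```

Keeping the test subset untouched until the end is what makes the final accuracy an honest estimate of performance on new cases.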

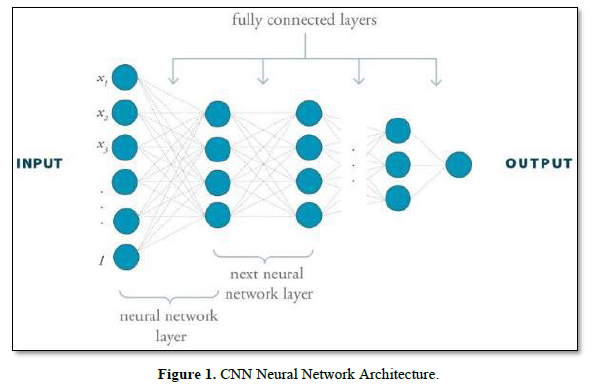

- Unsupervised learning is the process of finding correlations in data sets that carry no labels and are often unstructured. Statistics such as age, gender, height, and weight are examples of structured data, while images and text embeddings are examples of unstructured data [18].

These are the foundations of machine learning as we know it today. Deep learning, which is based on the hierarchical ordering of data or parameters into layers, underpins the majority of today's popular and widely used machine learning models. Before any prediction is produced, features are repeatedly multiplied by weights and added to the outputs from the previous layer [19]. As a result, the relationship between the features and the prediction becomes more intricate with each layer. The loss function tells us how well a machine learning algorithm estimates the labels (Figure 1) [20]. To improve outcomes and prediction rates, every machine learning system works to minimize the loss. Important concepts in deep learning include the cross-entropy loss and backpropagation.
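The layered multiply-and-add computation and the loss minimization described above can be sketched as a tiny two-layer network. This is a minimal illustration, not the method of any cited work: the network size, the tanh and sigmoid activations, the learning rate, and the toy OR data set are all assumptions chosen for brevity. The `backward` function applies the chain rule one layer at a time, which is the idea behind backpropagation.

```python
import math
import random


def forward(x, W1, b1, W2, b2):
    """Two-layer forward pass: multiply by weights, add a bias, squash."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    z = sum(w * hi for w, hi in zip(W2, h)) + b2
    p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of class 1
    return h, p


def cross_entropy(p, y):
    """Binary cross-entropy between predicted probability p and label y."""
    eps = 1e-12  # avoids log(0)
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))


def backward(x, y, h, p, W2):
    """Chain rule applied layer by layer, from the output back to the input."""
    dz = p - y                          # gradient at the sigmoid input
    dW2 = [dz * hi for hi in h]
    db2 = dz
    dh = [dz * w for w in W2]           # gradient reaching the hidden layer
    da = [dhi * (1.0 - hi * hi) for dhi, hi in zip(dh, h)]  # through tanh
    dW1 = [[dai * xi for xi in x] for dai in da]
    db1 = da
    return dW1, db1, dW2, db2


def train(data, hidden=3, lr=0.5, epochs=500, seed=0):
    """Stochastic gradient descent; returns parameters and loss per epoch."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0
    history = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            h, p = forward(x, W1, b1, W2, b2)
            total += cross_entropy(p, y)
            dW1, db1, dW2, db2 = backward(x, y, h, p, W2)
            # Move every parameter a small step against its gradient.
            for j in range(hidden):
                for i in range(n_in):
                    W1[j][i] -= lr * dW1[j][i]
                b1[j] -= lr * db1[j]
                W2[j] -= lr * dW2[j]
            b2 -= lr * db2
        history.append(total / len(data))
    return (W1, b1, W2, b2), history


# Toy labeled data (assumption): the logical OR of two binary inputs.
data = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]
params, history = train(data)
```

Comparing `history[0]` with `history[-1]` shows the average loss falling as training proceeds, which is what "working to minimize loss" means in practice.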

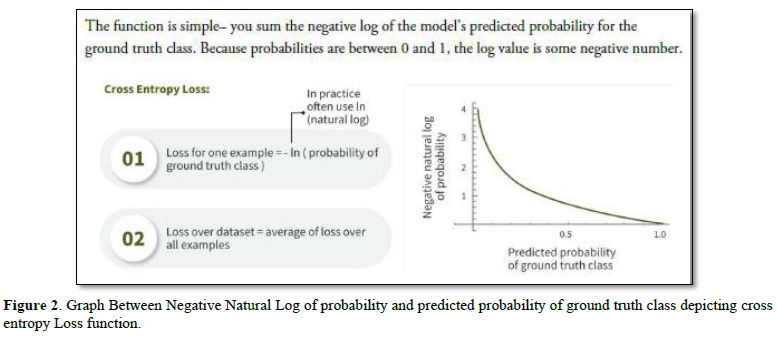

- Cross-entropy loss: this function measures the difference between the predicted probabilities and the true labels [21].

- Backpropagation: the key training technique of deep learning models, which breaks the gradient computation down into parts, layer by layer, rather than treating it as one combined structure [23].

Types of Deep Learning Neural Networks and Their Applications:

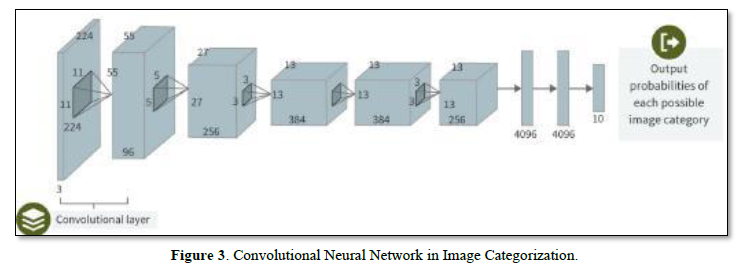

Convolutional Neural Networks (CNNs)

Designed for unstructured data, such as image sets used for detection.

Uses in dentistry:

- Histopathology: detection of abnormal cells in histopathologic sections in relation to disease [24].

- Clinical diagnosis: detection of oral lesions.

- Radiology: detection of caries, bone loss, and unidentified radiolucencies and radiopacities [25].

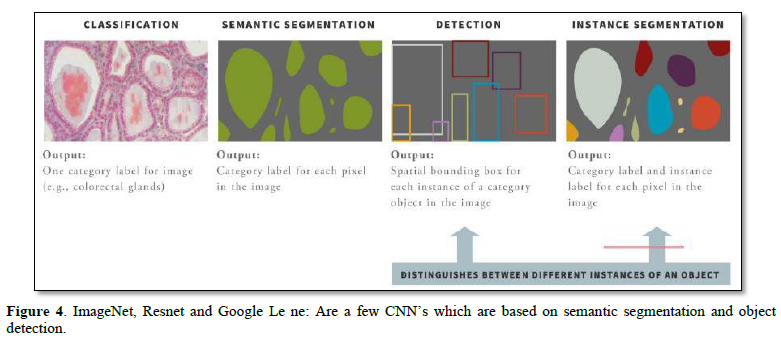

CNNs work by dividing the image into pixels and correlating them through the layers of the neural network to produce a result (Figure 3) [26]. Natural Language Processing (NLP) algorithms interpret and organize human language; they recognize not only the vocabulary but also the context behind the words [27]. ResNet and GoogLeNet are examples of CNN architectures, commonly trained on the ImageNet dataset, that are used for semantic segmentation and object detection (Figure 4) [28].
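The pixel-level processing described above can be sketched with a single convolution step. This is a minimal illustration under stated assumptions: the 4x4 image patch and the hand-picked edge kernel are hypothetical, whereas a trained CNN learns its kernels from data and stacks many such layers.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            # Weighted sum of the pixels under the kernel window.
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Keep positive responses and set negative ones to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 4x4 grayscale patch: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(conv2d(image, kernel))
# feature_map == [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The middle column of the feature map is non-zero because that is where the window straddles the boundary between dark and bright pixels, the kind of local pattern a CNN layer is built to pick up.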

- Reinforcement learning: because the model is built to anticipate outcomes using external agents, and because there is no single best way to stimulate the environment in practice, reinforcement learning remains relatively under-researched in medicine.

CONCLUSION

Exploration, the discovery of new possibilities and outcomes from existing data, and the development of alternatives to more laborious conventional procedures are at the heart of machine learning. Dentists have access to a plethora of data that can be mined to build predictive models, which can then be rolled out globally through user interfaces, put to work in low-resource environments, and used to provide patients with the best possible care.

- Bissell V, McKerlie RA, Kinane DF, McHugh S (2003) Teaching periodontal pocket charting to dental students: A comparison of computer assisted learning and traditional tutorials. Br Dent J 195(6): 333-336.

- in (2021) The future of dentistry, powered by AI. Accessed on: December 09, 2022. Available online at: https://www.hellopearl.com

- ai (2023) The #1 dental AI platform for Providers & Payers. Accessed on: December 09, 2022. Available online at: https://www.overjet.ai/

- Suzuki K, Yan P, Wang F, Shen D (2012) Machine learning in medical imaging. Int J Biomed Imag 2012: 123727.

- Lorenčík D, Tarhaničová M, Sinčák P (2013) Influence of Sci-Fi films on artificial intelligence and vice-versa. 2013 IEEE 11th International Symposium on Applied Machine Intelligence and Informatics (SAMI), pp: 27-31.

- Panda SK, Mishra V, Balamurali R, Elngar AA (2022) Artificial Intelligence and machine learning in Business Management: Concepts, Challenges, and case studies. Boca Raton, FL: CRC Press, Taylor & Francis Group.

- In Statistical and Machine Learning Approaches for Network Analysis (eds M. Dehmer and S.C. Basak).

- Basic input / output system emulation program. Chronicles OFERNiO [Internet]. Available online at: http://dx.doi.org/10.12731/ofernio.2019.24046

- Green S (2020) From Coder to Programmer, 2020 9th Mediterranean Conference on Embedded Computing (MECO), pp: 1-4.

- Exploring dependencies in complex input and complex output machine learning problems - rare & special e-zone [Internet]. Rare Special eZone. Accessed on: December 11, 2022. Available online at: https://lbezone.hkust.edu.hk/bib/991012936268003412

- Mehdy AK, Mehrpouyan H (2021) A Multi-Input Multi-Output Transformer-Based Hybrid Neural Network for Multi-Class Privacy Disclosure Detection. 2nd International Conference on Advances in Software Engineering (ASOFT 2021).

- Hsin W-J (2015) Learning Computer Networking Through Illustration (Abstract Only). In Proceedings of the 46th ACM Technical Symposium on Computer Science Education (SIGCSE '15). Association for Computing Machinery, New York, NY, USA, pp: 515.

- OECD (2017) There is scope to better match innovation input and output: Global Innovation Index: input-output matrix, 2016, in OECD Economic Surveys: Australia, OECD Publishing, Paris.

- Peck C, Dhawan AP, Meyer CM (1993) Genetic algorithm-based input selection for a neural network function approximator with applications to SSME health monitoring. IEEE Int Conf Neural Networks, pp: 1115-1122.

- Raa T (2006) From input-output coefficients to the Cobb-Douglas function. In the Economics of Input-Output Analysis. Cambridge: Cambridge University Press. pp: 99-107.

- Jia X, Wang L (2022) Attention enhanced capsule network for text classification by encoding syntactic dependency trees with graph convolutional neural network. Peer J Comput Sci 8: e831.

- Dhiman HS, Deb D, Balas VE (2020) Chapter 4 - Supervised Machine Learning Models Based on Support Vector Regression in Wind Energy Engineering, Supervised Machine Learning in Wind Forecasting and Ramp Event Prediction, Academic Press pp: 41-60.

- Shah C (2018) Structured vs unstructured data [Video]. SAGE Research Methods.

- Schneckener S, Grimbs S, Hey J, Menz S, Osmers M, et al. (2019) Prediction of Oral Bioavailability in Rats: Transferring Insights from In Vitro Correlations to (Deep) Machine Learning Models Using in Silico Model Outputs and Chemical Structure Parameters. J Chem Inform Model 59(11): 4893-4905.

- Dembczyński K, Waegeman W, Cheng W (2012) On label dependence and loss minimization in multi-label classification. Mach Learn 88: 5-45.

- Zhou Y, Wang X, Zhang M, Zhu J, Zheng R, et al. (2019) MPCE: A Maximum Probability Based Cross Entropy Loss Function for Neural Network Classification. IEEE Access 7: 146331-146341.

- Zhang J, Zhang Z, Li H, Liu X (2022) Optimizing double-phase method based on gradient descent algorithm with complex spectrum loss function. Opt Commun 514: 128136.

- Daryanavard S, Porr B (2020) Closed-Loop Deep Learning: Generating Forward Models with Backpropagation. Neural Comput 32(11): 2122-2144.

- Slater D (2000) A Clinical Atlas of 101 Common Skin Diseases with Histopathologic Correlation. Histopathology 37: 281-281.

- MacDonald D (2011) Radiopacities. Oral Maxillofac Radiol.

- Nguyen TM, Wu QMJ (2008) Maximum likelihood neural network based on the correlation among neighboring pixels for noisy image segmentation. 2008 15th IEEE International Conference on Image Processing, pp: 3020-3023.

- Volume 9: Number 12: Computer Science & Information Technology (CS & IT) (2022) Retrieved on: December 15, 2022. Available online at: https://airccse.org/csit/V9N12.html

- Salscheider N (2022) Simultaneous Object Detection and Semantic Segmentation. Retrieved on: December 15, 2022. Available online at: https://www.scitepress.org/Link.aspx?doi=10.5220/0009142905550561
